If you’ve read this column over the last few years, you have likely noticed several themes, one of which is people’s remarkable consistency in getting in their own way on the road to success. I’ve written many times that the three most important words a senior leader can say are “I don’t know.” Once you admit that and are open to finding out, your organization can really move forward and people will rally around you as a leader.

But saying “I don’t know” must also mean that you are willing to accept the truth when evidence is presented. With the information revolution having made virtually any piece of scholarly evidence available within seconds to virtually any person willing to look, it is all the more remarkable that we humans so fiercely cling to beliefs rather than evidence. Instead of leveling the playing field with information and isolating those who refuse to accept truth, the information revolution has only exacerbated the divide and is widening it by the day.

In the Feb. 27, 2017 edition of the New Yorker, Elizabeth Kolbert’s article “Why Facts Don’t Change Our Minds” reviews The Enigma of Reason by Hugo Mercier and Dan Sperber. Mercier and Sperber posit that reason developed as an evolutionary trait to help people survive within their community rather than to figure out problems. Given the choice between changing your mind based on evidence and going along with what the community thinks, the authors argue, staying true to the group makes survival more likely, even if you believe the group to be wrong.

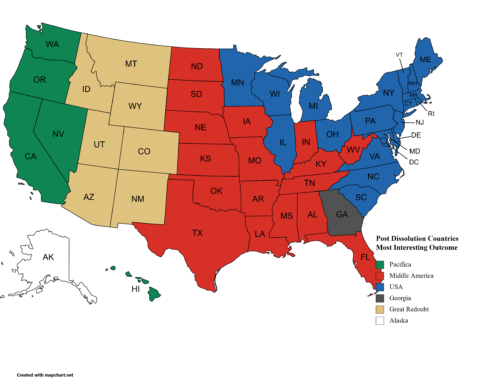

And if you think about today’s hyper-politicized social environment, with access to news, real or fake, open to everyone, it makes sense that people are choosing their own communities. This is the case both in actual household moves, where people are self-selecting into neighborhoods and communities with like political and social beliefs, and in online virtual communities, where people get all their information, whether accurate or not, from selective sources that line up with their beliefs.

Writing in The Atlantic, Julie Beck explains that not only do people have a difficult time accepting evidence that runs contrary to their beliefs, but that people are more likely to double down when their beliefs are challenged with clear and solid evidence. The driver is cognitive dissonance, the discomfort of holding two conflicting ideas in mind at the same time. Doubling down is a way of reducing that discomfort. It is easier to attack the facts as untrue or attack the motives of the researchers than to change your mind when evidence is offered.

Mercier and some colleagues found that when people were presented with their own answers to reasoning problems, they were almost certain to be unwilling to change their position, even when presented with an analysis of their mistakes. However, when their own answers were presented as someone else’s, half the participants figured out the switch and wouldn’t change their minds. The other half were very willing to see the faults in what they took to be others’ reasoning.

This boils down to simple pride in one’s own abilities, regardless of whether that pride is warranted or not. In a 1999 edition of the Journal of Personality and Social Psychology, Justin Kruger and David Dunning discuss the problems of such inflated self-assessment and pride in their article “Unskilled and Unaware of It.” Unsurprisingly, most people overestimate their own abilities. Surprisingly, the top quarter of performers underestimate theirs relative to others, not because they are unaware of their skills, but because they expect others to share the same skill levels. These are very telling findings that should be important to leaders in business, industry and government circles.

In The Logic of Real Arguments (one of the books I recommend all leaders keep near their desk), Alec Fisher offers a key tool in leadership and research methodology called the Assertibility Question: What argument or evidence would justify me in asserting the conclusion? (What would I have to know or believe to be justified in accepting it?)

The Assertibility Question is an outstanding tool to use as a leader mentoring your next generation of executives, as a subordinate gently trying to move your boss to act on evidence, or as an interested citizen trying to act on facts and not emotion. If someone’s answer to the Assertibility Question is “nothing,” meaning no evidence will change their mind, then further discussion is pointless. You need to move on to making some really difficult decisions, such as whether you should continue to work in that organization, or what you will do as an organization when everything falls apart because the empirical, evidential world has crushed your belief system.

As Beck writes in The Atlantic, you don’t have to accept evidence in your life. You can build a “pillow fort” to insulate you from facts you don’t want to know about. But as a leader in any business or governmental agency at any level, ignoring evidence or, worse yet, trashing it or those who produce it will ultimately hurt you and your bottom line. Do you want to go down as the person who knows everything and is always wrong, or as the boss who always stays ahead by not only accepting the truth as it is, but actively seeking it out and taking the next step of considering the implications of that evidence for your organization’s objectives?

There isn’t anything inherently wrong with being skeptical about evidence that goes against what you think to be true. That is where the Assertibility Question comes in. It may be that the evidence leads to some extremely difficult implications. Steve Levitt faced this issue in his long-running and seminal research on abortion and crime in the US. The fact that it made people on both sides of the political divide uneasy showed that it should be treated with healthy skepticism. But when that evidence held up repeatedly to empirical examination and testing, an honest person would have to admit there is something there. Levitt himself said that he wasn’t trying to say the evidence is good or bad, just that it exists. Opponents of his work repeatedly held up research that contradicted his work, but whose methods were secret or would not be released and thus couldn’t be trusted as valid.

The point here is that in business, in government and in life, we must be willing to accept truth, even if it is uncomfortable. The fact of the matter remains that wearing shorts and a t-shirt because we believe it will be warm today won’t stop it from snowing. Continuing to invest in the market when the evidence clearly shows an unsustainable bubble will not protect your financial health simply because you don’t believe the bubble will burst. Going into a business venture because you believe everyone will want to buy into your great idea, when the market research says otherwise, won’t make it so.

If you want to become a truly great leader of a truly great organization, I recommend frequent, open and challenging debriefings of your activities and decisions. Before making a decision, discuss with your team your objectives and the evidence that would affect the decision you are about to make. Then, after the process is over, go back and reconstruct the events and review your decisions in light of the situation as it presented itself. Write on the board what you expected to happen ahead of time and what actually happened during the process. Then try to determine the causes of the difference between the two. Finally, take concrete steps to learn from these mistakes so that you can apply the lessons to future decisions. Base it all on evidence and not unfounded beliefs, and you will be the model in your organization and your industry.

Ask yourself whether you want to live in the world of truth, or the land of make-believe. Both realms come with their own consequences.

Write me if you need help with this topic. It is a tough one. Few are more important.

Keep thinking.
